Enhancing Legal Reasoning in Large Language Models

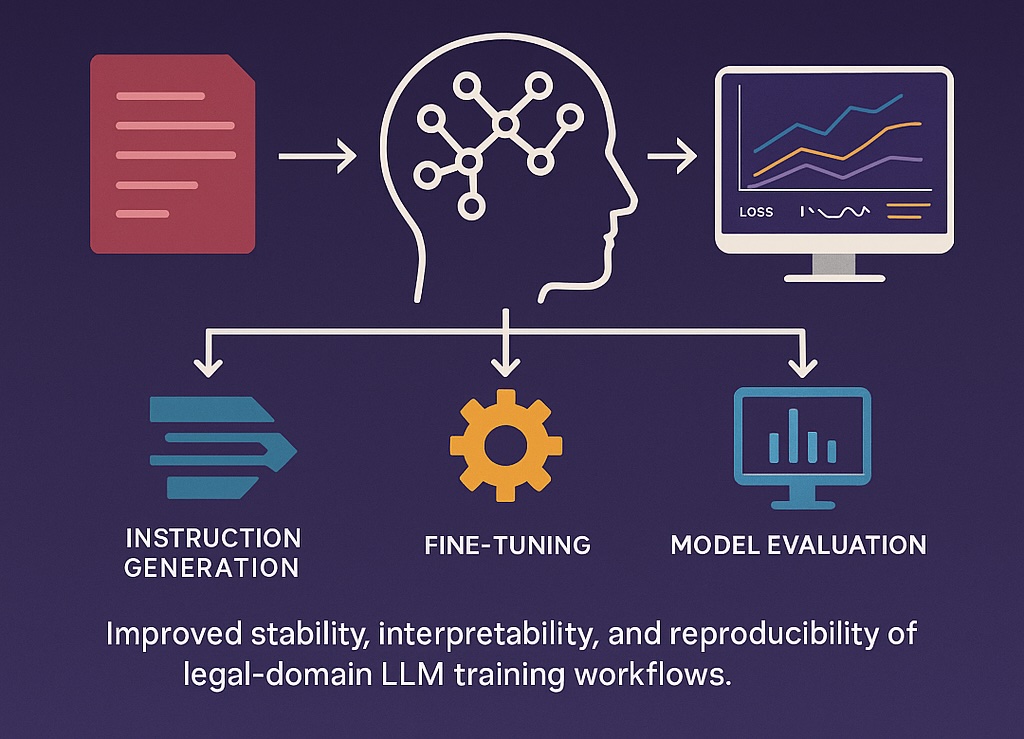

Bachelor thesis developing scalable instruction-generation and fine-tuning frameworks to improve legal reasoning capabilities of LLMs.

Project Overview

Bachelor thesis at Tsinghua University AI Laboratories (Advisor: Prof. Qingyao Ai). Developed scalable instruction-generation pipelines and fine-tuning frameworks to strengthen legal reasoning in large language models. Designed and ran variance-decomposition and convergence experiments to analyze model interpretability, and conducted extensive cross-validation and parameter-sensitivity studies to assess stability and robustness. Built interactive dashboards for monitoring training analytics (learning curves, loss trajectories, and generalization metrics) to support data-driven debugging and iterative performance tuning. Authored comprehensive research documentation covering data-distribution analysis, hyperparameter optimization, and methodological reproducibility, improving clarity, transparency, and readiness for academic publication.
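
As a rough illustration of what a variance decomposition over training runs can look like, the sketch below splits the variance of an evaluation score into a part explained by the hyperparameter configuration and a part left to random seeds. It is a generic example based on the law of total variance, not the thesis's actual procedure; the function name `decompose_variance`, the configuration labels, and the scores are hypothetical placeholders.

```python
from statistics import fmean, pvariance


def decompose_variance(scores_by_config):
    """Split score variance into between-config and within-config parts.

    scores_by_config maps a (hypothetical) configuration label to a list of
    evaluation scores, one per random seed. Population variances are used,
    so total == between + within up to floating-point error.
    """
    groups = list(scores_by_config.values())
    all_scores = [s for group in groups for s in group]
    n = len(all_scores)
    grand_mean = fmean(all_scores)

    # Within: size-weighted average of each config's variance across seeds.
    within = sum(len(g) * pvariance(g) for g in groups) / n
    # Between: size-weighted spread of config means around the grand mean.
    between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups) / n

    return {"total": pvariance(all_scores), "between": between, "within": within}


# Placeholder scores for illustration only; these are not reported results.
example_scores = {
    "lr=1e-5": [0.70, 0.72, 0.71],
    "lr=5e-5": [0.75, 0.74, 0.76],
    "lr=1e-4": [0.60, 0.68, 0.64],
}
parts = decompose_variance(example_scores)
print({name: round(value, 6) for name, value in parts.items()})
```

A large `between` share would suggest the score is sensitive to the chosen hyperparameter, while a large `within` share would point to seed-level instability.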

Impact

Improved stability, interpretability, and reproducibility of legal-domain LLM training workflows.